I hate jargon with a white-hot passion; the same goes for lingo and terminology. I try to avoid jargon like the plague. (Apparently, however, that fussiness doesn’t extend to clichés.) We’ve probably all noticed that some folks toss out buzzwords to impress others, perhaps to bolster their own credibility and camouflage their brittle understanding. (Remember the old T-shirt: “If you can’t dazzle them with your brilliance, then baffle them with [expletive deleted].”)

My favorite teacher used to say, “If you don’t know your subject well enough to explain it simply and clearly to an intelligent fifth grader without the use of lingo and jargon, then you need to keep studying.” So, I especially hate it when I realize that I’ve slipped into using some undefined terminology while teaching.

And the field of artificial intelligence is full of lingo, jargon, and impenetrable acronyms and initialisms: LLMs, RAG, context windows, inference, hallucinations, interpretability, embeddings, vectors, transformers, and the list goes on and on (seriously, I’ve got a list of about 100 awful AI-related words and phrases I’ve collected over the years).

You don’t need to know any of that vocabulary to get started with generative AI (which should probably be on the list, too), especially in its most popular form today: chatbots. At the heart of these tools is an understanding of natural language, so you can start using them simply by speaking to them in your everyday voice.

But today, to really get these tools to stand up and sing, a little understanding of how they work goes a long way. Two or three or five years from now, as these tools continue to become more intelligent and capable (as they have every month for the past two years), perhaps no training will be necessary for new users to achieve results as good as an experienced user’s. That day will probably come sooner than we’re ready for, but for today, a little knowledge still goes a long way.

Often, when we hear jargon or lingo, we try for a moment to discern its meaning from the context. But just as often, the context alone isn’t enough to help us understand the new word. So we press on, unenlightened, frustrated, and with a vague sense that we’re missing pieces of a puzzle.

Another favorite teacher used to say, “When you hit a speed bump, don’t just zoom over and past it; there are often interesting things to see around speed bumps. Instead: stop, look, learn.” So when some new terminology jumps into your path like a metaphorical speed bump, I encourage you, as a user of AI, not to speed past it but to stop, look, and learn. Or, at least, start making a list of these new and unfamiliar terms.

Another observation from the past two years: how often a piece of jargon is used can indicate how useful it is. Not always: sometimes hype and marketing explain a buzzword’s popularity. But if some jargon keeps popping up in different contexts, there is often something worth knowing behind it. I have found this to be the case again and again with the words and ideas surrounding AI, so much so that I’ve been collecting that list of unfamiliar terminology and helping students, friends, and colleagues by unpacking the simple and useful meaning behind the Loathsome Jargon.

This post inaugurates a new weekly feature, “Loathsome Jargon: An AI Glossary for Genealogists.” I don’t imagine any future entry will be as long as this initial post, and I will gather this basket of dictionary deplorables onto a single page that will grow each week. My hope and intent is to explain the meaning of these worrisome words simply and clearly and, perhaps more importantly, to show how the ideas behind the jargon can unlock usefulness and productivity in your AI genealogy. I intend this column to be a regular Monday feature, accompanying the occasional updates on my “2025 AI Genealogy Do-Over” and my weekly “52 Ancestors in 52 Weeks” and “Fun Prompt Friday” posts. We had a fairly substantial Snow Day in Virginia yesterday, so this announcement and the first entry in the Loathsome Jargon glossary are reaching you on a Tuesday this week (never let the perfect be the enemy of the good). So, without further ado, let me introduce our first entry in the Loathsome Jargon glossary (I told you that clichés didn’t make me flinch).

Loathsome Jargon #1: “Jagged Frontier”

Our first bit of Loathsome Jargon comes from Professor Ethan Mollick of the Wharton School, who studies AI and business, and from whom I have ~~stolen~~ learned so much. A saying of his that I repeat frequently is that “LLMs are weird.” Large language models are also amazing and hold frightening potential, and to call them a mixed blessing or a double-edged sword is an understatement: they are also a garbage barge full of trouble, in what went into them, what comes out of them, and what they might one day become. But perhaps above all, they are weird: they make things up (“hallucinations” will be our Bad Word of the Week before too long), they’ll give you two different answers to an identical question (“indeterminate” is in the Crazy Queue), and not even the people who built them can tell you exactly why they respond the way they do (“mechanistic interpretability” is NOT in the Top Twenty Troublesome Terms, but we’ll get to it eventually).

As Mollick writes, “AI is weird. No one actually knows the full range of capabilities of the most advanced Large Language Models, like GPT-4. No one really knows the best ways to use them, or the conditions under which they fail. There is no instruction manual. On some tasks AI is immensely powerful, and on others it fails completely or subtly. And, unless you use AI a lot, you won’t know which is which.”¹

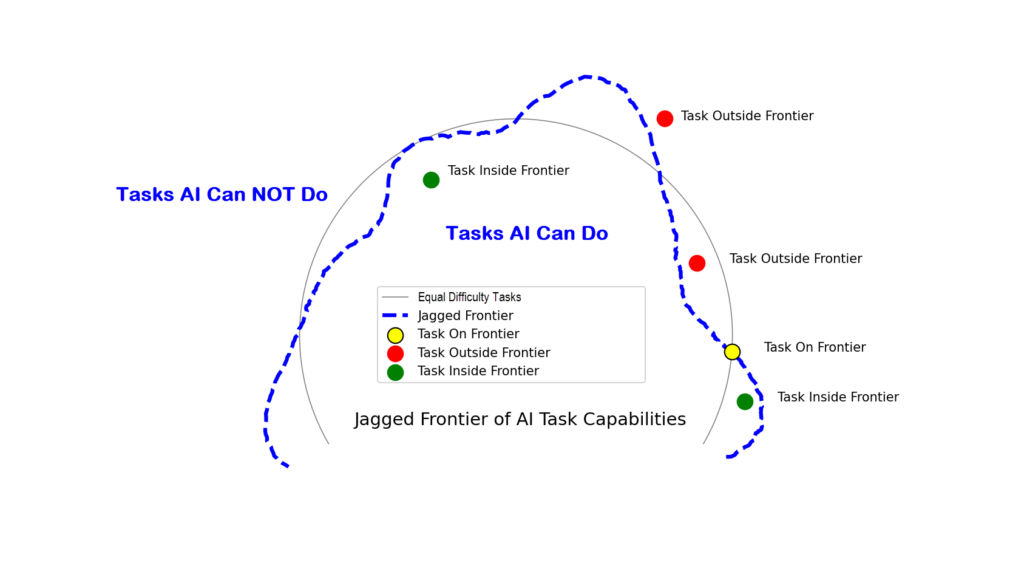

AI’s surprising abilities, ease of use, and unpredictability make it hard for users to fully understand its strengths and weaknesses. Some tasks that seem complex (like idea generation) are easy for AI, while simpler tasks (like basic math) can be difficult. This creates a “jagged frontier,” where similar tasks vary in how well AI performs them.²

Another characteristic of the jagged frontier, less evident in September 2023 when Mollick coined the term but very evident now, is that the frontier moves. Look at the shape above: the blue dashed line representing the jagged frontier somewhat resembles an amoeba. Mollick’s original metaphor evoked images of a fortress wall, which now seems far too fixed and permanent. Rather, like a rapidly growing amoeba, the jagged frontier represents the expanding edge of AI abilities.

What does all this mean for the family historian and genealogist? First, this knowledge reassures new users that the weirdness they experience with AI is common, if not universal. Second, the concept helps us understand why some genealogical tasks we attempt with AI succeed while similar tasks fail. And third, having watched AI’s capabilities grow and new ones emerge, we have reasonable hope that tasks that fail today might become possible with new models; as I like to say, today’s limits are tomorrow’s breakthroughs.

And what can we do with this information today? This is the most important thing: map your experience of the jagged frontier by keeping track of your failures. Tracking your AI failures does two things for you. First, it shows you where you are spending time and effort unproductively today. Second, your list of failures becomes your list of test cases for new models: a task that failed with GPT-4 may work with GPT-5.

So, that’s our first look at Loathsome Jargon. If you have a bit of troublesome AI terminology with which you’d like help, please let me know.

Sources:

1. Ethan Mollick, “Centaurs and Cyborgs on the Jagged Frontier,” One Useful Thing, September 16, 2023, available at https://www.oneusefulthing.org/p/centaurs-and-cyborgs-on-the-jagged, accessed January 7, 2025.

2. Fabrizio Dell’Acqua, Ethan Mollick, et al., “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality,” Harvard Business School Technology & Operations Management Unit Working Paper No. 24-013; The Wharton School Research Paper, September 15, 2023, available at http://dx.doi.org/10.2139/ssrn.4573321, page 4, accessed January 7, 2025.