In March, OpenAI, the company behind ChatGPT, teased new ways to interact with the AI beyond typing and copy-paste: we would be able to use images and voice with ChatGPT. It took longer than I had hoped, but those abilities are being rolled out over the next two weeks to Plus and Enterprise users. As a Plus subscriber, I don’t have access yet, but we should have it in the next few days.

Today’s OpenAI announcement is here: https://openai.com/blog/chatgpt-can-now-see-hear-and-speak. In a nutshell, OpenAI has introduced voice and image capabilities to ChatGPT, so users can hold spoken conversations with the AI and show it images to work with.

Since OpenAI teased these abilities in March, there has been a lot of daydreaming about the use cases they might open up. Back then, we were abuzz with speculation about GPT-4’s potential. The promise of visual input suggested we might soon be feeding the AI everything from portraits to crucial historical documents. A standout notion was that GPT-4 might recognize and extract text, or even decipher handwriting, from genealogical records such as birth, marriage, or death certificates. The dream? To drop a trove of such images into the system and watch it extract every piece of data and repackage it as narratives, tables, CSV, GEDCOM, or JSON. Imagine an AI script sifting through a digital folder bursting with historical records and generating a detailed, sourced GEDCOM file that suggests ties and connections between all the people mentioned. It truly felt like we were on the cusp of revolutionizing our field.
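To make that daydream a little more concrete, here is a minimal Python sketch of just the repackaging step. It assumes the hard part is already done, namely that the AI has read a record image and handed back the fields. The sample record, its field names, and the helper functions `to_json`, `to_csv`, and `to_gedcom_fragment` are all hypothetical and invented for illustration. The GEDCOM output is only an individual-record fragment, not a complete, valid file.

```python
import csv
import io
import json

# Hypothetical fields, as if already extracted from a death certificate image.
# The person, the values, and the field names are invented for illustration.
records = [
    {
        "given": "Jane",
        "surname": "Example",
        "event": "death",
        "date": "12 MAR 1901",
        "place": "Springfield",
    },
]

# Only individual-level events are handled in this sketch.
EVENT_TAGS = {"birth": "BIRT", "death": "DEAT"}


def to_json(rows):
    """Serialize the extracted records as pretty-printed JSON."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the extracted records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_gedcom_fragment(rows):
    """Build GEDCOM-style individual records (a fragment, not a full file)."""
    lines = []
    for number, row in enumerate(rows, start=1):
        lines.append(f"0 @I{number}@ INDI")
        lines.append(f"1 NAME {row['given']} /{row['surname']}/")
        lines.append(f"1 {EVENT_TAGS[row['event']]}")
        lines.append(f"2 DATE {row['date']}")
        lines.append(f"2 PLAC {row['place']}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_json(records))
    print(to_csv(records))
    print(to_gedcom_fragment(records))
```

The point of the sketch is that once the fields exist as structured data, turning them into any of these formats is the easy part. The extraction, the source citations, and the suggested family connections are where the real work, and the real risk of error, would lie.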

Our expectations have been tempered quite a bit in the past six months, but the genealogical exploration these features will open up for ChatGPT Plus users in the coming weeks and months is still exciting.

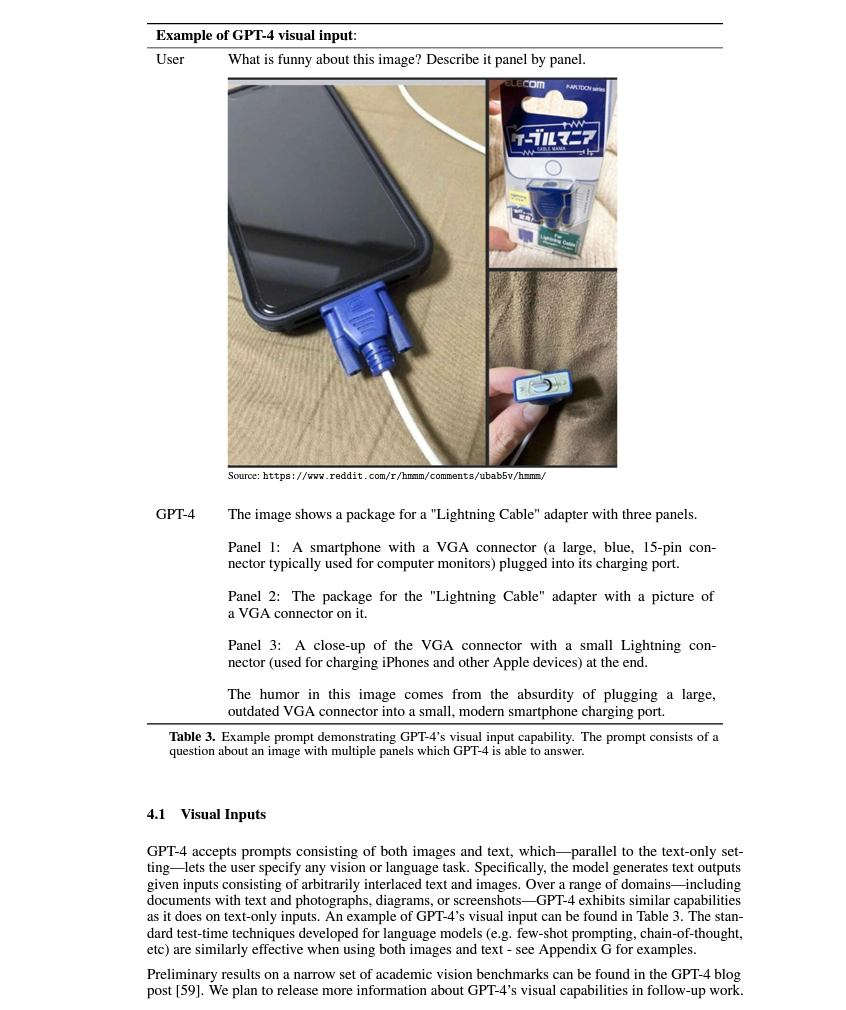

In their March tease of these abilities, OpenAI released this example of what might be done with visual input (this image is from page 9 of the full report):

Summer is over; time to get busy. I look forward to hearing about your discoveries and I’m excited about sharing mine in the weeks and months to come.
