The AI space has been ablaze with news about “reasoning” models since the release of DeepSeek’s reasoning model “R1” (which grabbed attention for its 1) strength; 2) non-US origins; 3) open-source availability; and 4) cheap access). Now, as OpenAI releases its next reasoning models – “o3-mini” and “o3-mini-high” – it’s worth understanding what this “reasoning” buzz is all about. Here’s a higher-level overview and a more concrete example:

What’s Actually Happening Here?

Oversimplified, a “reasoning” model takes a few moments to refine its response before it gives you an answer. The Loathsome Jargon you may hear is “test-time thinking” or (the truly hideous Loathsome Jargon) “inference compute”. Functionally, it’s as if the model, before responding to your prompt, asks itself whether it understands your intent, thinks things through “step-by-step,” and then reconsiders and revises its answer. You experience this “reasoning” during use when you see the chatbot take anywhere from a few seconds to a few minutes before responding. Seriously, reasoning models do not excel at back-and-forth conversation.

Current State of Play

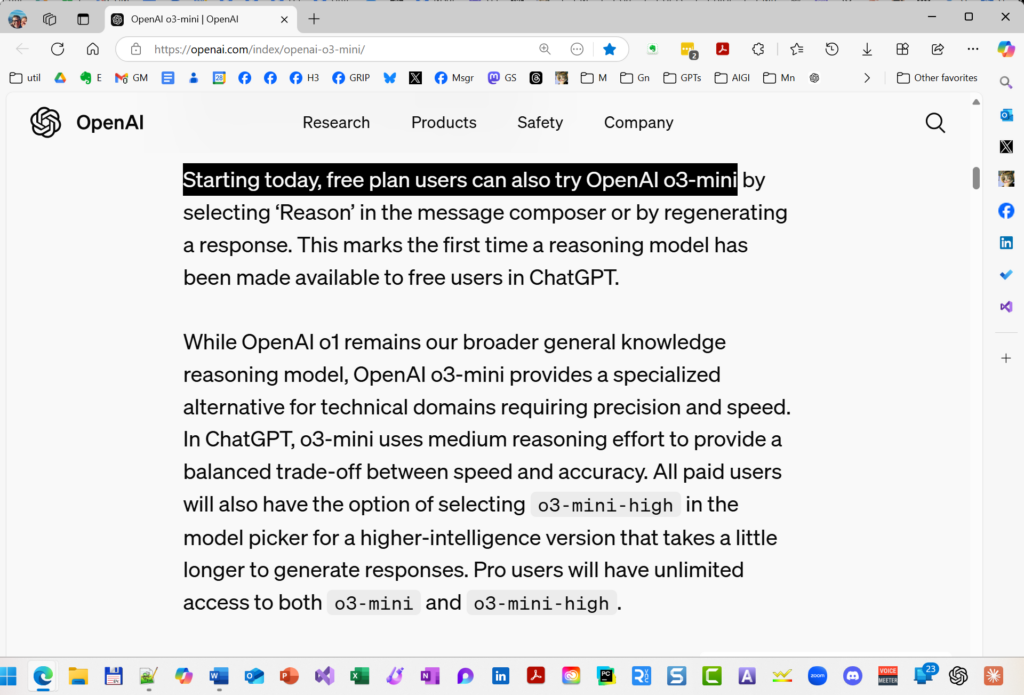

OpenAI has launched two new AI reasoning models, o3-mini and o3-mini-high, pushing forward their capabilities in coding, science, and complex problem-solving while responding to competition from DeepSeek’s recent advances. The o3-mini model delivers responses 24% faster than its predecessor while maintaining comparable performance to o1, and notably, o3-mini is the first reasoning model available to free ChatGPT users. The o3-mini-high version offers enhanced performance for paid users, with both versions featuring adjustable reasoning effort levels (low, medium, or high) to balance speed and accuracy. Access to o3-mini allows unlimited usage for Pro subscribers ($200/month), while Plus and Team plan users ($20/$50/month) are limited to 150 messages per day (triple their previous limit), with Enterprise and Educational customers gaining access within a week. The model also introduces integrated search capabilities, providing up-to-date answers with web source links.

Because this sub-type of LLM is newer and has been less used until now, its practical applications are still being discovered, kinda like when GPT-4 first dropped in March 2023. In a nutshell, reasoning models complement rather than replace traditional LLMs, with different strengths and uses. Reasoning models are said to excel at PLANNING and ITERATIVE ANALYSIS. A perfect example appeared in “I Asked ChatGPT’s New ‘Reasoning’ Model to Craft a Research Plan–Here’s What Happened” (December 6, 2024), which tested o1’s planning capabilities through a complex genealogical research task. Notably, I departed from my usual practice of iterative chatbot conversation, instead testing the model’s initial, single-shot planning capability – exactly the kind of structured, thoughtful task where reasoning models shine.[1]

Practical Application

Really oversimplifying: Traditional models (ChatGPT GPT-4o, Claude 3.5 Sonnet, and Google Gemini) work best for most LLM processing tasks (summarization, extraction, generation, transformation). Reserve “o1”, “R1”, and now the new “o3” variants for when you’d actually like the model to spend some time on a more considered response, such as when asking for a plan, strategy, or analysis.
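To make that rule of thumb concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the function name `pick_model_family` and the task labels are hypothetical, not part of any vendor's API, and a real workflow would involve more judgment than a lookup.

```python
# Hypothetical task labels, taken from the rule of thumb above.
REASONING_TASKS = {"plan", "strategy", "analysis"}


def pick_model_family(task: str) -> str:
    """Return 'reasoning' or 'traditional' for a task label.

    Illustrative only: the labels and the function are assumptions.
    """
    label = task.strip().lower()
    if label in REASONING_TASKS:
        return "reasoning"
    # Default to a traditional model, which suits most processing tasks
    # (summarization, extraction, generation, transformation).
    return "traditional"


if __name__ == "__main__":
    for task in ("summarization", "plan", "extraction", "analysis"):
        print(f"{task}: {pick_model_family(task)}")
```

The default matters here: anything that is not clearly a planning, strategy, or analysis request falls back to a traditional model, which matches the advice to reserve reasoning models for when you actually want a more considered response.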

Using These Tools Effectively

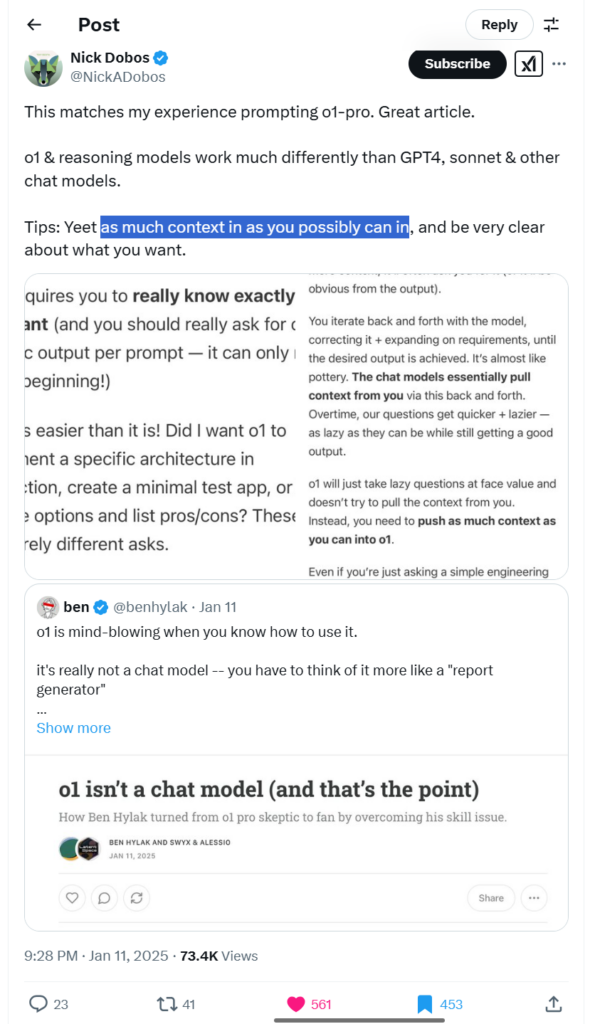

TIPS: When using reasoning models, be simple and direct with your goal or question WHILE providing as much context or detail as possible.
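One way to picture that tip is a prompt with a single direct goal on top and the supporting detail listed underneath. The sketch below is only an illustration under that assumption: `build_reasoning_prompt` is a hypothetical helper, the layout is one of many that could work, and the genealogy details in the usage example are made up.

```python
def build_reasoning_prompt(goal: str, context: list[str]) -> str:
    """Put one direct goal first, then list every piece of context.

    Hypothetical helper for illustration; not a required format.
    """
    goal = goal.strip()
    if not goal:
        raise ValueError("goal must not be empty")
    lines = [goal]
    # Drop blank entries so the context list stays clean.
    details = [item.strip() for item in context if item.strip()]
    if details:
        lines.append("")
        lines.append("Context:")
        lines.extend(f"- {detail}" for detail in details)
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up example details, for illustration only.
    print(build_reasoning_prompt(
        "Draft a research plan to identify the parents of my ancestor.",
        [
            "Known facts: name, approximate birth year, county of residence.",
            "Records already searched: census, marriage index.",
        ],
    ))
```

The goal stays one sentence; the context list can grow as long as it needs to.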

This is still early days for reasoning models, so expect new uses and best practices to develop over time. For deeper insights, Prof. Ethan Mollick’s recent work on reasoning models provides excellent context – particularly his December 2024 summary “What just happened”[2] and his earlier introduction to reasoning models from September 2024, “Something New: On OpenAI’s ‘Strawberry’ and Reasoning.”[3]

Source: Nick Dobos, @NickADobos, https://x.com/NickADobos/status/1878267872079937637.

Footnotes

1. Steve Little, “I Asked ChatGPT’s New Reasoning Model to Generate a Research Plan” – AI Genealogy Insights blog, https://aigenealogyinsights.com/2024/12/06/i-asked-chatgpts-new-reasoning-model-to-craft-a-research-plan-heres-what-happened/ (December 2024)

2. Ethan Mollick, “What just happened” – One Useful Thing blog (December 2024)

3. Ethan Mollick, “Something New: On OpenAI’s ‘Strawberry’ and Reasoning” – One Useful Thing blog (September 2024)
