This is the first of three “Fun Prompt Friday” posts to start the new year, introducing a set of AI tools to empower researchers with the basic AI skills to advance their family history research.

The basic unit of AI genealogy is the USE CASE, a task that a generative model can accomplish as well as the average person. An example of a use case is prompting a language model to extract all factual statements from a text (by text I mean any chunk of words, not an SMS message you send with your phone); a specific example might be to prompt a chatbot to extract all biographical, genealogical, historical, and cultural statements from an obituary, and the chatbot responds by generating a structured, meaningful list of claims in the form “LABEL: Value,” for example, “DATE_OF_BIRTH: 28 Feb 1943” and dozens or hundreds of other pieces of information extracted from a source text provided by the researcher to the chatbot.

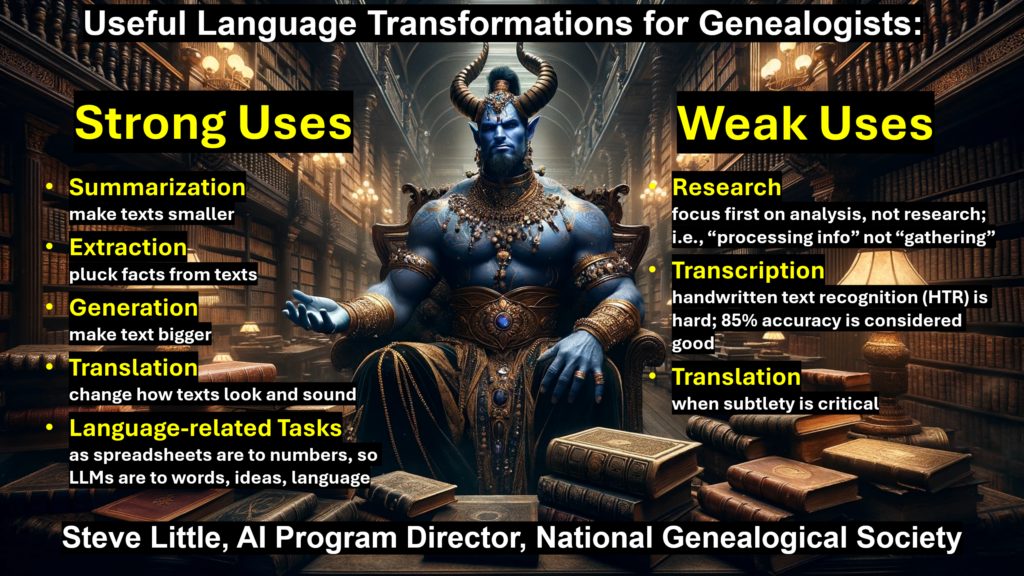

Extracting structured data from unstructured text is just one of dozens or hundreds of use cases available to today’s researcher. Generally, some use cases are accomplished by the AI with such high success and reliability that those tasks can responsibly and effectively be shared or perhaps even offloaded to an AI (with reasonable care and oversight). I call these powerful and reliable use cases “Strong.” (I describe as “Weak” the use cases that are less reliable, are harder to perfect, or require expertise or advanced training; these “Weak” use cases, such as fact-gathering or subtle language translation, may not be impossible, that is, they may be possible, but they increase the risk to the researcher and/or require a greater investment in time and experience.)

When we identify genealogical tasks in family history research that can be safely, accurately, and reliably performed in whole or in part by AI tools, then we recognize an opportunity to become a more effective and efficient researcher by automating that task. We automate that task by perfecting the prompt, saving the prompt, and re-using the prompt when it is appropriate.

As a researcher, writer, and teacher of AI-assisted genealogy, a behavior I witness among newcomers to AI genealogy is the attempt to run before learning to walk or even crawl. And, unfortunately, these tools—beyond the disclaimer ‘ChatGPT can make mistakes–check important info’—do not warn researchers about the risks of inexperienced users attempting Weak use cases. (To be clear, the risk is basing genealogical conclusions on inaccurate information.) This overreach is perfectly understandable and entirely forgivable: we hear of these wonderful new tools, and new users—lacking experience and reasonable, performance-based expectations—will aim for the stars, swing for the fences. When disappointing results are returned, the foolish user will dismiss the entire enterprise, the hard-headed user will insist on prematurely pursuing the Weak use cases, and the wise genealogist will resolve to master the basics, to learn the use cases where AI is safe, accurate, and reliable (and then use their mastery of the basics skills to conquer the Weak use cases).

Here is a hint: today’s chatbots are powered by a type of artificial intelligence called a “large language model” or LLM; for our purposes, the key word of that name is “language“—these tools excel at processing language: documents, articles, papers, chapters, pages, paragraphs, sentences, words. A second point to keep in mind is that accuracy is higher when the user provides text for analysis instead of relying on the AI to find and analyze text itself.

You, the wise genealogist, would like to explore the Strong use cases, so where to start? Today, I consider four basic language transformations Strong use cases: Summarization, Extraction, Generation, and Translation. Summarization is like turning coal into diamonds, distilling and condensing information into more useful forms. Extraction is like finding the gold needles in a field of haystacks, plucking facts from texts. Generation is making texts bigger, for example, turning a list of names, dates, places, relationships, events, and facts into stories, articles, papers, statements, arguments, proofs, and reports. I use the word Translation in a broader sense than traditionally understood, including not just the common meaning of rendering a text from one language to another, but also converting language for different times, purposes, and audiences. For example, Translation can mean turning Elizabethan English into modern English, legal jargon into plain language, scientific terms into everyday words, or even rephrasing plain sentences to sound like a Southerner, New Englander, or Southern Californian. Translation is all about changing how language looks and sounds while keeping the original meaning.

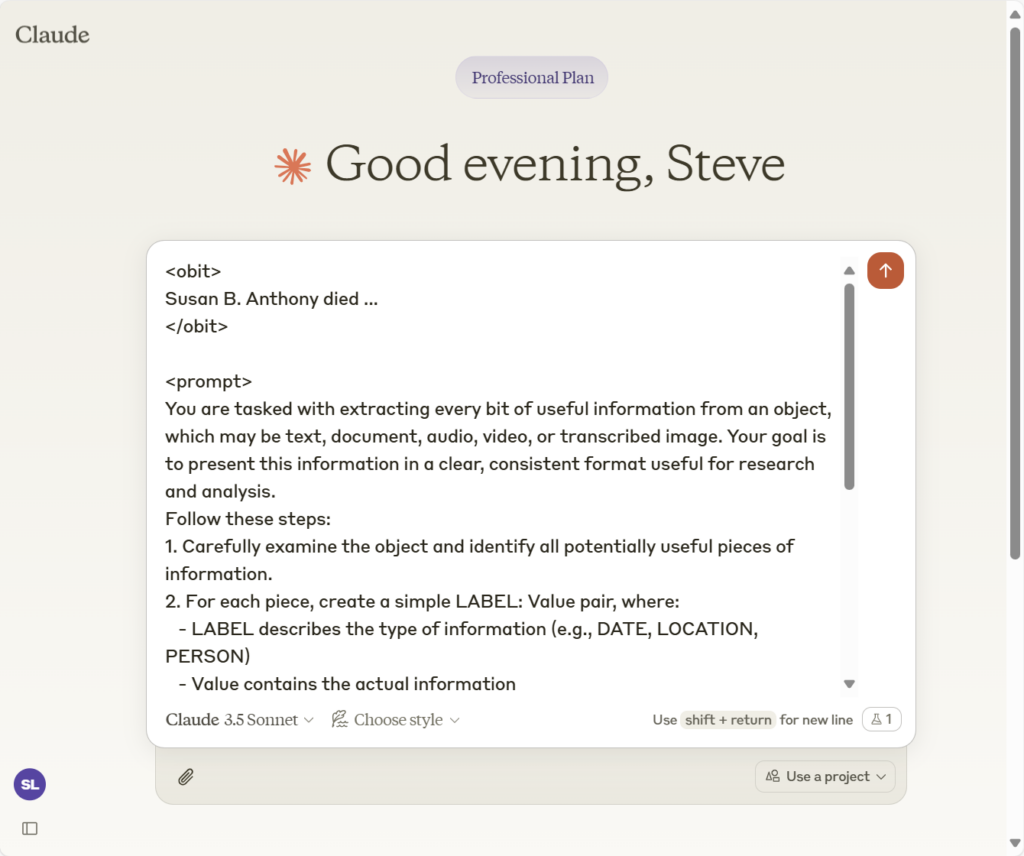

I’d like to share with you the prompt I currently use for Extraction. There are several ways you can access and use this Extraction prompt. First, below you will find the full text of the prompt, and you can copy-and-paste the prompt into your favorite chatbots: OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Gemini, and even Adobe Acrobat’s AI Assistant, Facebook’s Meta AI, X/Twitter’s Grok, or Microsoft’s Copilot. To use the prompt this way, you also include the source text from which the facts will be extracted; I usually place the source text above/before the prompt, as shown in the example below.

There is also an even easier way to use this prompt. Many AI vendors now allow users to create, save, and share their most useful prompts; at OpenAI these saved prompts are called “Custom GPTs,” at Anthropic they are called “Projects,” and at Google’s Gemini saved prompts are called “Gems.” OpenAI allows users with a paid subscription to create, save, and share their Custom GPTs with users without a paid subscription; that means anyone can use prompts saved as Custom GPTs, including free-tier users. I have made over 80 of these saved-prompt tools, most of which are freely available, both as full-text (with an open license and my blessing for you to use and modify as you’d like) and as free, online Custom GPTs. I’ll demonstrate both methods here.

First, here is the full text of my current extraction prompt.

<PROMPT>

You are tasked with extracting every bit of useful information from an object, which may be text, document, audio, video, or transcribed image. Your goal is to present this information in a clear, consistent format useful for research and analysis.

Follow these steps:

1. Carefully examine the object and identify all potentially useful pieces of information.

2. For each piece, create a simple LABEL: Value pair, where:

- LABEL describes the type of information (e.g., DATE, LOCATION, PERSON)

- Value contains the actual information

3. Use clear, descriptive labels that accurately represent the information type; avoid duplicate LABELs by being specific

4. If uncertain about information, prefix with "POSSIBLE_"

5. Present findings as a simple list, one item per line

6. If no useful information can be extracted, state this fact

Example formats:

BIRTH_DATE: 1945-05-08

DEATH_DATE: 2024-05-08

BIRTH_LOCATION: Berlin, Germany

DEATH_LOCATION: Richmond, Virginia

LEADER: Winston Churchill

POSSIBLE_OCCUPATION: Factory worker

Begin your analysis and present findings in this format.

<metadata>

TITLE: Steve's Facts Extractor, version 3

CREATOR: Steve Little; https://AIGenealogyInsights.com/

DATE: Friday 3 January 2025

LICENSE: This work is licensed under a Creative Commons BY-NC 4.0 License.

</metadata>

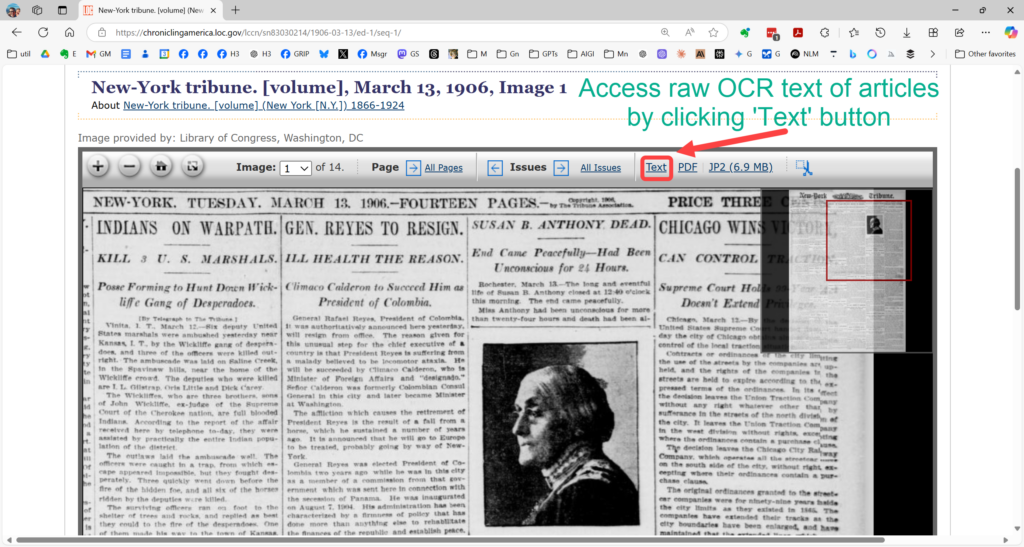

</PROMPT>As an example of how to use the prompt, let’s consider the obituary of Susan B. Anthony as found on the Library of Congress’s newspaper archive, Chronicling America, as it appeared in the New-York Tribune on the day of her death, Tuesday 13 March 1906 (the full-text of the profile is on page four, while the front page death notice is shown here, an important detail, as you’ll see soon).

The raw OCR text from most newspaper archives is an unmitigated horror, which explains why the indexes derived from them are so bad, and therefore why it is so challenging to find articles with most newspaper search tools. (Often an 85% OCR accuracy rate is considered average and acceptable, which doesn’t sound too bad—perhaps one word in eight misspelt and therefore incorrectly indexed—until you learn that that 85% accuracy rate is PER CHARACTER, not per word, meaning the per-word accuracy rate is closer to an abysmal 45%.)

So, the researcher has a choice, to use the error-prone raw OCR text for the fact extraction, or to attempt to clean the raw OCR text, improve its readability and accuracy, and use that corrected text with the extraction prompt. In my experience, the LLMs actually handle the messy, raw OCR surprisingly well. But I also have another Custom GPT just for cleaning raw OCR, so I use both methods (the see link below for my OCR Proofreading tool).

- Example of raw OCR of Anthony obituary being proofread:

https://chatgpt.com/share/67789a84-9148-8004-9920-6e949ce47b62 - Example of cleaned Anthony obituary used for fact extraction:

https://chatgpt.com/share/6778a64c-6bb4-8004-af18-657c7bdfa162

Note that when you use saved prompt tools such as Custom GPTs, Projects, and Gems, that you do not need to paste the prompt into the chatbot—the prompt is already in the tool for you to use.

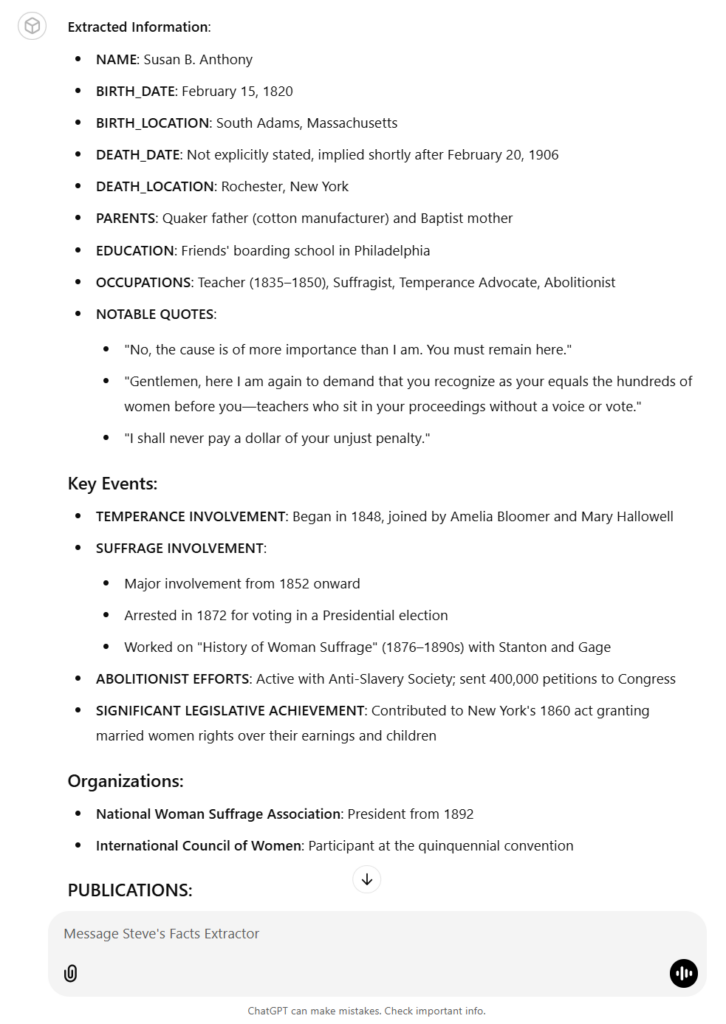

The image below shows the results of the extraction prompt; note the structured LABEL: Value format. This format ensures that the extracted information is richly saved for further analysis and use.

If you would like to modify the prompt, you are encouraged to do so (all my prompts are freely distributed with a CC 4.0 BY-NC license). This means that you can also use the prompt at any LLM, not just OpenAI’s ChatGPT. When you work with the full prompt, you provide both the source text and the prompt, usually in one message. Here is what that looks like if you place the source text before the prompt (my practice, although there is some discussion about this order; I prefer to keep my prompt closer to the inference point (where the next word being generated), so I finish with my prompt; in other words, by finishing with the prompt, the prompt is the last thing the AI “reads” before generating the next word in the response, which it builds one word at a time).

Here is how Anthropic’s Claude 3.5 Sonnet responded to the raw OCR text and our extraction prompt:

Anthropic's Claude 3.5 Sonnet:

Let me analyze this obituary and extract the key information in a structured format:

PERSON_NAME: Susan Anthony (also referred to as Miss Anthony)

BIRTH_DATE: 1820-02-15

BIRTH_LOCATION: South Adams, Massachusetts

DEATH_LOCATION: Rochester, New York

DEATH_YEAR: 1906

FATHER_BACKGROUND: Quaker, cotton manufacturer

MOTHER_BACKGROUND: Baptist

EDUCATION: Friends' boarding school in Philadelphia

OCCUPATION_TEACHER_DATES: 1835-1850

OCCUPATION_TEACHER_LOCATION: Various schools in New-York State

ACTIVISM_START_DATE: 1848

ACTIVISM_FOCUS_INITIAL: Temperance movement

KEY_ASSOCIATES: Mrs. Amelia Bloomer, Mrs. Mary Hallowell, Rev. Antoinette L. Brown, Elizabeth Cady Stanton, Matilda Joslyn Gage

SIGNIFICANT_EVENT_1: 1872 arrest for voting in Presidential election in Rochester

SIGNIFICANT_EVENT_2: 1876 began "History of Woman Suffrage" (completed after her 80th birthday)

ORGANIZATIONAL_ROLES:

● Vice-president-at-large of National Woman Suffrage Association (1869-1892)

● President of National Woman Suffrage Association (1892)

PUBLICATION_FOUNDED: "The Revolution" (women's suffrage newspaper)

PUBLICATION_LOCATION: New York City

PUBLICATION_DEBT: $10,000

LAST_PUBLIC_APPEARANCE: Celebration of 86th birthday in Washington, February 15, 1906

FINAL_ILLNESS: Blood clot on the brain, slight pneumonia

RESIDENCE_FINAL: Lived with sister Mary Anthony in Rochester

ACHIEVEMENTS:

● Helped secure married women's rights to earnings and child guardianship (New York legislature, 1860)

● Distributed 400,000 petitions to Congress for abolition of slavery

● Placed "History of Woman Suffrage" in 1,200 public libraries

● At age 76, conducted Pacific Coast lecture tour (three times daily)

● At age 83, attended International Council of Women convention

POSSIBLE_LAST_WORDS: "The cause is of more importance than I am" (said to Rev. Anna Shaw when declining accompaniment home during final illness)I hope you find this extraction prompt useful. I do encourage you to improve upon it.

As I mentioned, this is the first in a set of three Fun Prompt Fridays. Next week, Fri 10 Jan 2025, I will share a prompt that compliments this extraction prompt. And in two weeks, on Fri 17 Jan 2025, I’m really excited to share a video with you, demonstrating something that I don’t believe anyone else is yet teaching.

This effort is in support of my 2025 AI Genealogy Do-Over, about which you can read more here, https://aigenealogyinsights.com/2025/01/01/the-2025-ai-genealogy-do-over/, including a “52 Ancestors in 52 Weeks” component. I look forward to sharing this work with you in the seasons to come, and I’d love to hear about your experiences. If you are interested is this discussion, there are more than 8,500 folks in Blaine Bettinger’s Facebook group “Genealogy and Artificial Intelligence (AI)” where these discussions happen daily. I hope to meet you there.

Sources:

- “Miss Anthony’s Life: Foremost Woman Suffragist Beloved by All Who Knew Her.” New-York Tribune (New York, NY), 13 March 1906. Chronicling America: Historic American Newspapers, Library of Congress. https://chroniclingamerica.loc.gov/lccn/sn83030214/1906-03-13/ed-1/seq-4/. Accessed: Fri 3 Jan 2025.

Tools:

- Steve’s Fact Extractor, the Custom GPT powered by the extraction prompt above:

https://chatgpt.com/g/g-KQgmxiHqC-steve-s-facts-extractor - Steve’s OCR & Proofreading Tool, a Custom GPT powered by a proofreading prompt:

https://chatgpt.com/g/g-gkY4MAtMb-steve-s-ocr-proofreading-tool - Remember: when using other people’s saved prompt, the prompt is already built into the tool, so you can just submit your information to be processed; if a saved prompt balks, you can simply prompt, “Process as instructed.” Remember, too, that you can continue a chat with a Custom GPT, in which case it is normal to prompt as the context suggests (that is, chat with your chatbot, iterating through a conversation with prompt and response, prompt and response, etc.).

Extras:

- Example of a Custom GPT which attempts to clean raw OCR text, here exemplified with the Susan B. Anthony obituary used above; in this example, you can see both the raw OCR text and the cleaned version provided by GPT-4o:

https://chatgpt.com/share/67789a84-9148-8004-9920-6e949ce47b62

You can use this tool to clean your own raw OCR discoveries; just select the New Chat option from the drop-down menu.

2 thoughts on “Fun Prompt Friday: Extraction”

Comments are closed.