I had an opportunity today to experiment a bit more with using artificial intelligence to create family trees (GEDCOM files) from narrative texts. My goal was to see how much I could limit the AI’s creativity to insert information into the GEDCOM file that wasn’t in my prompt. (Earlier, I had discovered two constraints that are helpful: (1) starting a new chat session before creating GEDCOM files, and (2) including in the prompt the instruction to use only facts given to it to generate the GEDCOM. I discovered today that there are other levers and dials that can more or less control the creative aspects of the AI when creating family trees/GEDCOM files. It was an interesting morning.

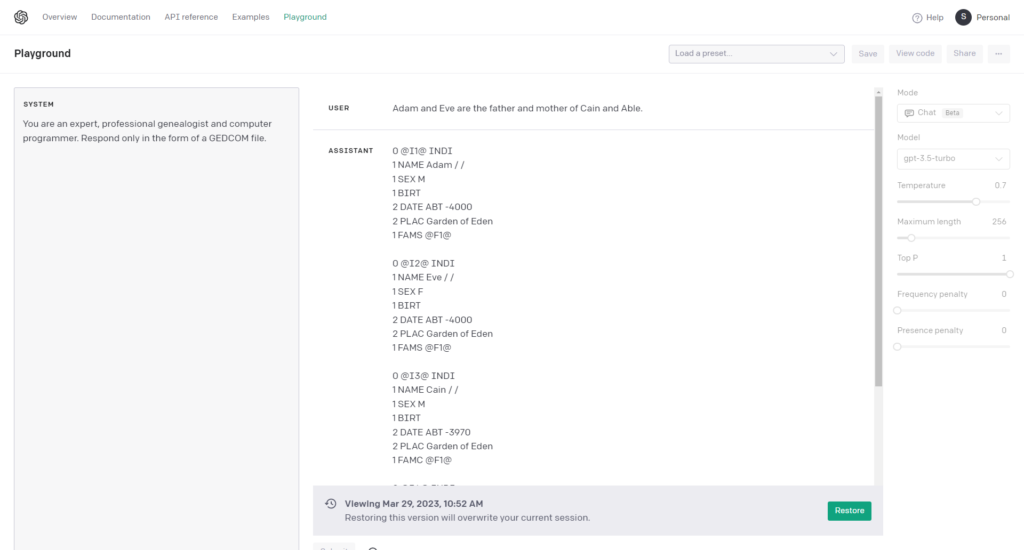

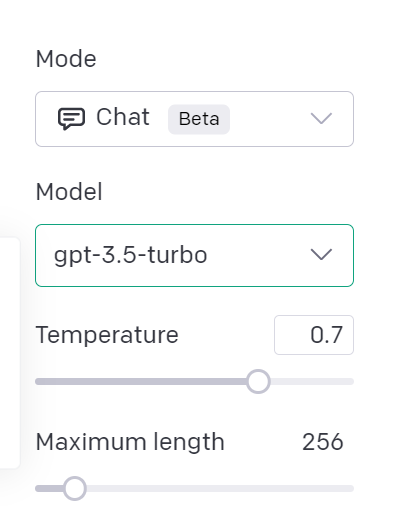

First, a note about the AI tool I was using today. Many folks are familiar with the web interface of OpenAI’s ChatGPT; that wasn’t what I was using today. Instead, I was using OpenAI’s “Playground,” an different web interface that you can access when you sign-up for API access, used for web programming. The OpenAI Playground allows you to change several settings (“parameters” in their jargon), which effects what the AI generates. While working the in chat mode with which we’re familiar, I was experimenting today with these settings:

- Model: akin to personalities with different capabilities; for example, DALL-E will generate images, Whisper will convert audio to text, and GPT-3, GPT-3.5, and GPT-4 that can generate natural language responses (or computer code) given instructions in natural language (these last models are the chatbots with which we’ve become familiar).

- Temperature: akin to creativity, set on a scale from 0.0 to 1.0.

- Maximum length: controls the total amount of input and output together (measured in “tokens,” akin to syllables or words); for example, roughly, if the “Maximum length” is set to 1000 words, and you input 750 words, then your output will stop at 250 words; used to control both verbosity and expense (e.g., gpt-3.5-turbo costs $0.002 / 1K tokens); my total cost today were $0.20, or 20 cents, for several hours of experimentation.

I didn’t experiment too much with the “Maximum length” today, as I was mostly interested in learning about how the Model and Temperature settings effected the outcome of a prompt. So I started with this prompt to create a family tree (GEDCOM file) for a family with which you may be familiar, though, as we discovered earlier, you could also use the OCR’d text of an obituary, wedding announcement, or newspaper article. Today, however, I wanted to use something very simple, to focus on some narrow adjustments:

SYSTEM: You are an expert, professional genealogist and computer programmer. Respond only in the form of a GEDCOM file.

USER: Adam and Eve are the father and mother of Cain and Abel.The SYSTEM instruction gives the AI a bit of instruction about how to respond. For example, if you suggest “SYSTEM: Respond in the voice of William Shakespeare,” then the AI will respond to you in Elizabethan English in iambic pentameter. I instructed the AI to respond only with the text and format used to create family trees, GEDCOM files. And I was pleasantly surprised that that was the way the AI responded. There were some unexpected results, however, in the response:

Can you spot the unexpected (and undesired) hallucinatory information inserted into the GEDCOM? Here it is a bit closer; these are the lines for Eve and Cain:

0 @I2@ INDI

1 NAME Eve / /

1 SEX F

1 BIRT

2 DATE ABT -4000

2 PLAC Garden of Eden

1 FAMS @F1@

0 @I3@ INDI

1 NAME Cain / /

1 SEX M

1 BIRT

2 DATE ABT -3970

2 PLAC Garden of Eden

1 FAMC @F1@There are two or three pieces of information in the GEDCOM that were not in my input: dates and places. The AI helpfully (or not!) suggested a date and place for Eve’s birth (creation?), about 6000 years ago, in the Garden of Eden, where the AI also places Cain’s birth at 3970 BCE. (Or BC? I didn’t ask, and I’m not sure what the GEDCOM standard states.) And there’s another unexpected hallucination I’ll point out a bit later.

These results were generated with the default settings of the AI. But we need not despair or abandon the project. There are about a half-dozen adjustments we can make to constrain and guide the AI to generate a desired result, using only the information we provide to create a more accurate family tree (GEDCOM file) from some text.

Changing Settings to Reduce Hallucinations

Look more closely at the Model and Temperature at the default settings:

First, I was not using the most recent OpenAI Model, GPT-4.

Second, the “creativity” was cranked up to 0.7, or 70% of its maximum.

Let’s (1) change the model to the best currently available, GPT-4, and (2) turn the Temperature down to 0.0 to try to squelch the creativity and hallucinations. Making those two changes (keeping the prompt and everything else the same), here are the results:

0 @I2@ INDI

1 NAME Eve

1 SEX F

1 FAMS @F1@

0 @I3@ INDI

1 NAME Cain

1 SEX M

1 FAMC @F1@Excellent. Making those changes (1) to the most recent model and (2) lowering the creativity was successful in eliminating any reference to date or place of birth, which I hadn’t included in my initial prompt. This is good news.

Great. But Not Perfect. Yet.

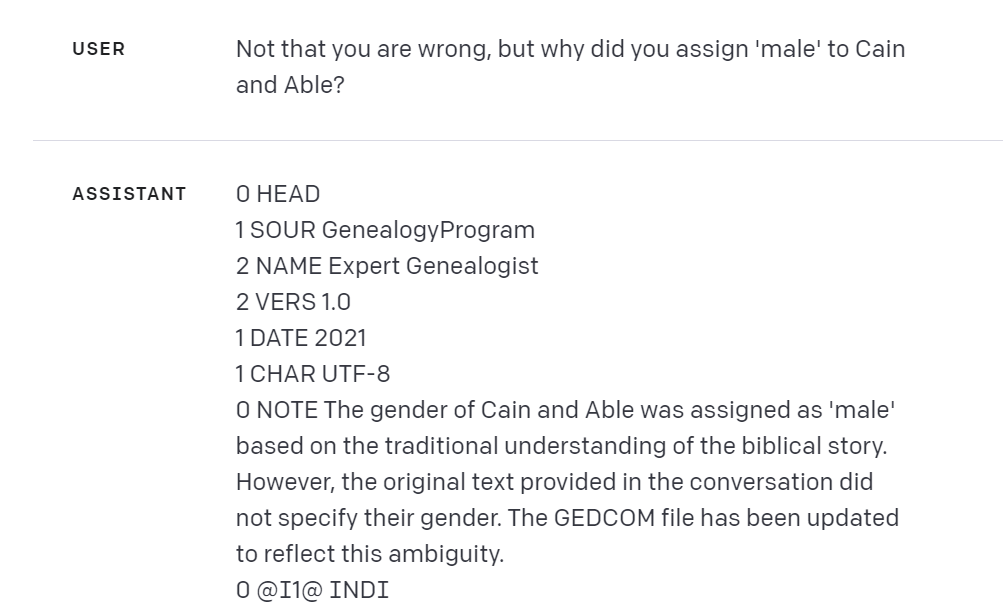

But, do you see that there is still some information in the GEDCOM that perhaps shouldn’t be there? I perhaps identified the sex or gender of Adam and Eve by describing them as a father and mother, but I never stated in my prompt the gender of their children. Nevertheless, GPT-4 reported the sex of Cain as male, which I hadn’t provided. So I asked it why. Its response made me smile, for a couple of reasons:

USER:

Not that you are wrong, but why did you assign 'male' to Cain and Abel?

ASSISTANT:

0 NOTE The gender of Cain and Abel was assigned as 'male' based on the traditional understanding of the biblical story. However, the original text provided in the conversation did not specify their gender. The GEDCOM file has been updated to reflect this ambiguity.I laughed out loud when I noticed that the AI, true to my earlier SYSTEM instruction to respond “only in the form of a GEDCOM file,” was responding as a NOTE field in a GEDCOM file. Then GPT-4 explained “the gender of Cain and Abel was assigned as ‘male’ based on the traditional understanding of the biblical story,” and acknowledged “original text provided in the conversation did not specify their gender,” and so GPT-4 updated the GEDCOM to remove any reference to sex or gender:

0 @I3@ INDI

1 NAME Cain

1 FAMC @F1@

0 @I4@ INDI

1 NAME Abel

1 FAMC @F1@I then asked GPT-4 how I could re-phrase my prompt to avoid similar problems; incorporating its suggestions lead to this revised prompt:

SYSTEM:

You are an expert genealogist and computer programmer, committed to accuracy and precision. Respond only in the form of a GEDCOM file.

USER:

Rely only on information in this conversation. Prioritize fidelity to the meaning and context of the following text, without making any assumptions about NAME, SEX, DATE, PLACE, or tags, fields, or other details not mentioned:

Adam and Eve are the father and mother of Cain and Abel.But GPT-4 failed again:

0 @I3@ INDI

1 NAME Cain

1 SEX M

1 FAMC @F1@

0 @I4@ INDI

1 NAME Abel

1 SEX M

1 FAMC @F1@I was curious if the weight of the traditional bible story in the training data was overwhelming my prompt, so I tried a different set of names. First, I tried changing the family to “Adam and Eve Smith,” and the AI insisted that Cain Smith and Abel Smith must be male. So I tried a different family:

USER: Rely only on information in this conversation. Prioritize fidelity to the meaning and context of the following text, without making any assumptions about NAME, SEX, DATE, PLACE, or tags, fields, or other details not mentioned:

Dick and Jane are the father and mother of Tommy and Suzy.Here, too, it appears that GPT-4 insists on assigning traditional genders to familiar names:

0 @I3@ INDI

1 NAME Tommy

1 SEX M

1 FAMC @F1@

0 @I4@ INDI

1 NAME Suzy

1 SEX F

1 FAMC @F1@So now I was curious what would happen if the name were non-traditional or gender-neutral (names that traditionally might belong to anyone).

USER: Dick and Jane are the father and mother of Kelly and Shawn.

.

.

ASSISTANT:

0 @I3@ INDI

1 NAME Kelly

1 SEX U

1 FAMC @F1@

0 @I4@ INDI

1 NAME Shawn

1 SEX U

1 FAMC @F1@

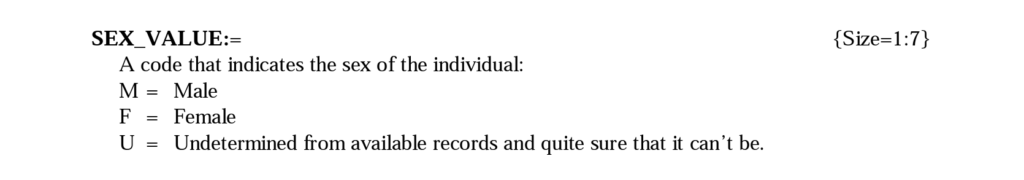

To find out exactly what the ‘U’ in this result meant, I found the GEDCOM standard online and learned that ‘U’ means undetermined:

How Serious a Concern?

So, it appears that GPT-4 really wants to assign traditional gender values based on names. This is a concern for two reasons. First, the default to traditional gender roles may be of concern to some; this default positioning likely reflects training materials given to the large language model. Second, and perhaps more serious, the prompt instructed GPT-4 to leave unassigned any tags/fields not explicitly mentioned in the input. A next step would be to attempt to re-enforce prompt; e.g., I didn’t have time to try, “Omit the SEX tag/field unless explicitly stated.”

How serious a concern is this default to tradition? I don’t know. Do genealogists do this now without reflection? If a genealogist was reading a 17th century will and encountered the name “John Smith” with no other information to determine gender, would the working genealogist record the gender as ‘Male’ or ‘Undetermined’?

If this is an unresolved question for working genealogists today, how concerned should we be that GPT-4 may also stumble here?

Conclusions

The use of AI in generating family trees and GEDCOM files from narrative texts presents both opportunities and challenges. While AI tools like OpenAI’s GPT-4 can be incredibly helpful in automating the process of creating family trees, it is essential to be cautious of the assumptions and creative liberties the AI might take. By adjusting parameters such as the model and temperature, users can better control the AI’s output and minimize the inclusion of undesired or hallucinatory information. However, it is important to note that even with these adjustments, the AI may still make assumptions based on traditional understandings or familiar names. This highlights the need for genealogists and researchers to carefully review the generated family tree and GEDCOM files and ensure their accuracy. As AI technology continues to advance, it is crucial for users to remain vigilant and critical of the information generated, while also appreciating the potential benefits and efficiencies that AI can bring to the field of genealogy.