In November 2022, we were all thrust into an extreme position, but we need neither stay there nor lurch from one extreme to the other. There is a middle way (actually, many). This week on "The Family History AI Show" podcast, Mark and Steve discuss this and other topics in AI-assisted family history: https://blubrry.com/3738800/ (episode #2 available Tuesday 11 June 2024).

Introduction

Artificial intelligence (AI) has rapidly become a significant tool in many fields, including genealogy. This week on The Family History AI Show podcast, Mark Thompson and Steve Little discuss how AI is being used and disclosed in the genealogy community, highlighting key points from recent debates and developments.

The Importance of AI Use and Disclosure in Genealogy

The integration of generative AI into genealogy has sparked essential conversations about its role and the need for transparency. As AI tools generate text, audio, images, and video, genealogists must consider who (or what) is creating both the content they rely on and the content they produce. From the outset, there has been a pressing discussion about when it is appropriate to use these tools and when their use must be disclosed. This question matters not only to family historians and genealogists but to anyone concerned with the authenticity and accuracy of historical records.

Context and Current State

Generative AI burst into wider public consciousness in November 2022, about 18 months ago, bringing with it a host of concerns and questions. Since then, the genealogy community has grappled with two extreme positions. At one extreme is a laissez-faire approach with no rules, where everyone acts independently; this is where we were all thrust by default in November 2022. At the other extreme is a complete prohibition of AI tools. Prohibition has its own problems: because these tools offer powerful advantages, banning them outright often drives people to use them surreptitiously (as observed in Fortune 500 companies), or drives people to leave organizations that make unreasonable, extremist demands. These extremes underscore the need for a balanced approach to AI use and disclosure as the community continues to debate the best path forward.

A Middle Ground: The “Human Rule”

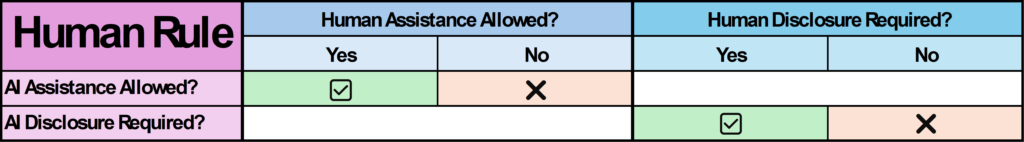

To navigate the complexities of AI use and disclosure, a middle ground known as the “human rule” has been proposed. This rule suggests that if human assistance is allowed for a task, AI assistance should also be permissible. Similarly, if disclosure is required when a human helps with something, it should also be required if AI is used. This approach aims to provide a sensible framework for AI use without resorting to extremes. (The coiner of the phrase, Steve Little, dislikes the name he chose, as it seems to suggest a currently unwarranted elevation of AI.) This middle way is not intended as a permanent solution, nor a one-size-fits-all position, but rather as a temporary, non-extremist starting point while individuals and organizations think more deeply about their values and needs.

while individuals and organizations find their footing, by Steve Little.

However, the heuristic has its limitations. For example, Blaine Bettinger’s Facebook group, “Genealogy and Artificial Intelligence (AI),” has a sensible rule that AI-generated images must be labeled as such, even though we don’t require the same for human-created photographs. Here the human rule alone would not call for disclosure, yet labeling is clearly warranted (and perhaps we should label human-created images too, and mark images of unknown origin as such).

AI Integration in Mainstream Software

AI is becoming increasingly integrated into everyday software, which makes prohibiting its use ever harder. Tools like Grammarly and spell checkers have used AI for years without anyone expecting disclosure, because their assistance is usually minor. Now large language models and other generative AI are being built into mainstream software like Word and Excel, where their impact can be far more substantial. By the end of 2024, it will be difficult to find mainstream software without built-in support for large language models and generative AI, and by 2025, avoiding AI in software will be nearly impossible, akin to saying no to software itself. Not all AI assistance needs to be disclosed, but disclosure becomes crucial when the assistance is substantial and materially affects the outcome.

Autonomy in Rule-Making

Family historians, especially those not bound by organizational rules, have the autonomy to make their own decisions about AI use. Different organizations and societies within the genealogy community will establish their own guidelines, reflecting their unique needs and values. Even within larger organizations, rules may vary between departments, underscoring the importance of tailored approaches. These decisions won’t always be easy, and those responsible (family historians, genealogists, educators, administrators, editors, employers, boards) should be extended grace while they educate themselves and discern their paths forward.

Conclusion

As AI continues to evolve, finding a balanced approach to its use and disclosure in family history is crucial. The “human rule” offers a sensible starting point, but ongoing dialogue and adaptation will be necessary. By navigating these challenges thoughtfully, the genealogy community can harness the benefits of AI while maintaining transparency and integrity in its work.

